How do ChatGPT plugins (and similar LLM concepts) work?

The growing popularity of OpenAI’s ChatGPT has inspired efforts to overcome its limitations and enhance the potential of large language models (LLMs) for new use cases. But how does this work?

In a previous article, we explored how to incorporate your own data into LLMs through grounding. Today, we will look at the concepts and architecture behind ChatGPT plugins and similar approaches for other LLMs, and at how to integrate multiple data providers to produce more comprehensive and better-informed responses.

Pick the right tool for the job. (Photo by Polesie Toys on Pexels)

1. Why does your language model need plugins?

Large language models are undoubtedly powerful, yet they may not excel at every task. Instead of answering directly, an LLM can take multiple steps to gather the relevant information by calling plugins (also known as tools).

ChatGPT plugins [1], for example, can offer the following capabilities:

- Access up-to-date information, from public or private sources

- Execute code to perform complex calculations or data-processing tasks

- Integrate with third-party services to execute tasks on another platform without leaving the chat

Overall, plugins make language models more versatile and better equipped to handle a wider range of tasks, from creating Jira tickets to adding groceries to your online shopping cart or retrieving real-time sales data from Salesforce.

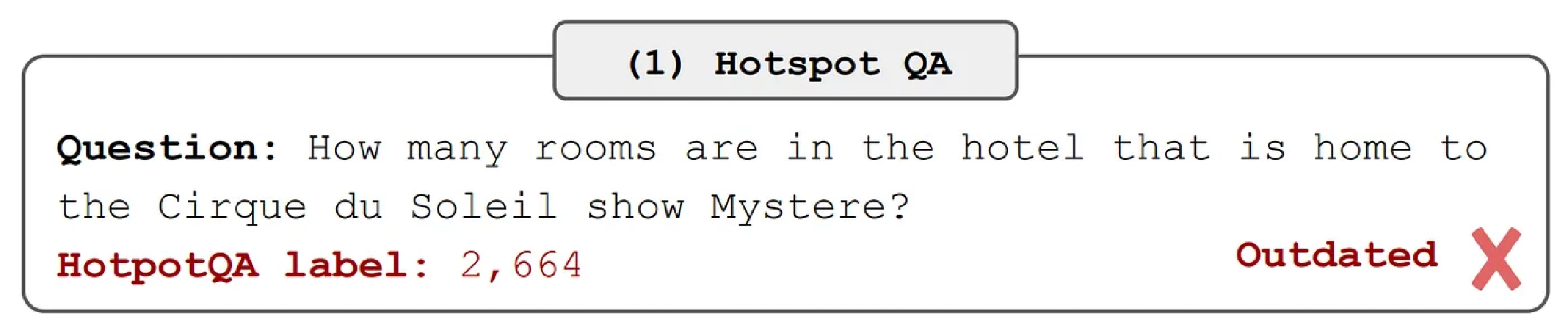

LLMs’ limits arise from dated training data. — (Image via ReAct on Arxiv)

Extending LLMs with tools is vital for expanding their use cases. Although ChatGPT has generated interest in LLMs through its chat interface, chat may not be the best interface for many tasks [2]. These models will likely be integrated directly into task-based processes and modern UIs, where the ability to call the right tools becomes essential.

2. A scratchpad for your language model

Although the ChatGPT Research Preview (chat.openai.com) isn’t open source, we can speculate how its plugins work by examining its documentation, relevant research papers and tools like LangChain, LlamaIndex and Semantic Kernel.

In the past, we built chatbots that identified the user’s intent from the input and routed it to predefined actions (topics). LLMs can follow a similar approach, but with fewer preconfigured topics and a far more flexible format, which allows a broader range of interactions and more adaptability.
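For contrast, here is a minimal sketch of that older pattern: a keyword-based router that maps the input to one of a few predefined topics. The topics, keywords and handlers are hypothetical, and production chatbot platforms usually rely on trained intent classifiers rather than keyword lists, but the limitation is the same: anything outside the predefined topics falls through to a fallback.

```python
import re
from typing import Callable

# Hypothetical handlers for a fixed set of predefined topics.
def order_status(text: str) -> str:
    return "Your order is on its way."

def opening_hours(text: str) -> str:
    return "We are open from 9:00 to 17:00."

def fallback(text: str) -> str:
    return "Sorry, I did not understand that."

# Each topic is triggered by hard-coded keywords and mapped to exactly one handler.
TOPICS: dict[str, tuple[list[str], Callable[[str], str]]] = {
    "order_status": (["order", "package", "delivery"], order_status),
    "opening_hours": (["open", "hours", "closing"], opening_hours),
}

def route(text: str) -> str:
    """Return the first topic whose keyword appears in the input, else 'fallback'."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    for topic, (keywords, _handler) in TOPICS.items():
        if words.intersection(keywords):
            return topic
    return "fallback"

def respond(text: str) -> str:
    topic = route(text)
    handler = TOPICS[topic][1] if topic in TOPICS else fallback
    return handler(text)

print(respond("Where is my package?"))  # handled by order_status
print(respond("Can you summarize my last three invoices?"))  # no topic matches
```

An LLM-based agent replaces the hard-coded keyword table with natural-language tool descriptions that the model reasons over, so requests nobody anticipated can still be mapped to a suitable tool.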

We begin with a prompt that includes two key elements: a list of tools, and a structure that lets the model execute actions in a loop until the original question is answered. On each turn, the prompt is extended with the new actions and the LLM’s response (the completion). This is known as the ReAct (Reason + Act) paradigm [3], which is a variant of a MRKL (Modular Reasoning, Knowledge and Language) system [4].

Still sounds very abstract, right? How will this look in practice?

Comparison of 4 prompting methods: (a) Standard, (b) Chain-of-thought (CoT, Reason Only), (c) Act-only, and (d) ReAct (Reason+Act). (Image via ReAct on arXiv [3])

The image above, from the ReAct research paper [3], shows the step-by-step ReAct method used to find an answer. Behind the scenes, the model is initialized with a prompt that lists all the tools it can use and provides a space the LLM can use as a scratchpad to write down the steps it takes.

Upon receiving the input, the LLM produces a completion that guides the next action. After recording the action and noting its outcome, the process repeats until the task is complete, signaled by a special FINISH action. This iterative method allows the language model to efficiently tackle tasks while utilizing the available tools.
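The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not how ChatGPT or any particular framework implements it: `fake_llm` is a hypothetical stand-in that replays scripted completions instead of calling a real model, the two tools are stubs, and the `Finish[...]` marker follows the notation of the ReAct paper.

```python
import re
from typing import Callable

# Toy knowledge base behind the hypothetical Search tool.
FACTS = {"capital of France": "Paris"}

# Hypothetical stub tools standing in for real plugins.
TOOLS: dict[str, Callable[[str], str]] = {
    "Search": lambda query: FACTS.get(query, "No results found."),
    "CountLetters": lambda word: str(sum(ch.isalpha() for ch in word)),
}

# Completions that fake_llm replays, one per turn.
SCRIPT = [
    "Thought: I need to find the capital of France.\nAction: Search[capital of France]",
    "Thought: Now I count the letters in Paris.\nAction: CountLetters[Paris]",
    "Thought: I know the answer.\nAction: Finish[5]",
]

def fake_llm(prompt: str, turn: int) -> str:
    """Hypothetical stand-in for a model call: ignores the prompt and replays SCRIPT."""
    return SCRIPT[turn]

ACTION_RE = re.compile(r"Action: (\w+)\[(.*?)\]")

def react(question: str, max_turns: int = 5) -> tuple[str, str]:
    """Run the Thought/Action/Observation loop until Finish; return (answer, scratchpad)."""
    scratchpad = f"Question: {question}\n"
    for turn in range(max_turns):
        # A real agent would prepend the tool descriptions to the scratchpad here.
        completion = fake_llm(scratchpad, turn)
        scratchpad += completion + "\n"
        match = ACTION_RE.search(completion)
        if match is None:
            raise ValueError(f"No action found in: {completion!r}")
        action, argument = match.groups()
        if action == "Finish":
            return argument, scratchpad
        tool = TOOLS.get(action)
        observation = tool(argument) if tool else f"Unknown tool: {action}"
        scratchpad += f"Observation: {observation}\n"
    raise RuntimeError("No answer within the turn limit")

answer, trace = react("How many letters are in the name of the capital of France?")
print(trace)
print("Answer:", answer)
```

With a real model, the agent typically passes a stop sequence such as `Observation:` so that the model stops after choosing an action and the agent, not the model, writes the tool's actual result into the scratchpad. The `max_turns` limit guards against loops that never reach `Finish`.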

A starting prompt for this method might look like this, with new thoughts, actions and observations added at each step along the way:

PREFIX = """Assistant is a large language model trained by OpenAI. [...]

TOOLS:
------

Assistant has access to the following tools: Document Search, Power BI executor

Document Search is useful when users want information from company documents.

Power BI executor is useful when users want information that can be queried from internal Power BI datasets.
"""

FORMAT_INSTRUCTIONS = """To use a tool, please use the following format:

Thought: Do I need to use a tool? Yes
Action: the action to take, should be one of [Document Search, Power BI executor]
Action Input: the input to the action
Observation: the result of the action

When you have a response to say to the Human, or if you do not need to use a tool, you MUST use the format:

Thought: Do I need to use a tool? No
{ai_prefix}: [your response here]
"""

SUFFIX = """Begin!

Previous conversation history:
{chat_history}

New input: {input}
{agent_scratchpad}
"""

Example prompt, adapted from the one used by LangChain’s conversational agent

This serves as an example of how various tools can be integrated into your LLM. To gain a deeper understanding of the rationale behind similar paradigms and architectures, consider exploring research papers such as ReAct [3], MRKL [4] and Toolformer [5].
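On the application side, the agent fills in `{chat_history}`, `{input}` and `{agent_scratchpad}`, sends the prompt to the model, and then parses each completion to decide whether to call a tool or reply to the user. Below is a minimal parser sketch for the format above; the regular expressions, the `AI` value assumed for `{ai_prefix}` and the class names are illustrative choices, not LangChain’s own output parser.

```python
import re
from dataclasses import dataclass
from typing import Union

AI_PREFIX = "AI"  # assumed value substituted for {ai_prefix} in the template
TOOL_NAMES = {"Document Search", "Power BI executor"}

@dataclass
class ToolCall:
    tool: str
    tool_input: str

@dataclass
class FinalAnswer:
    text: str

def parse_completion(completion: str) -> Union[ToolCall, FinalAnswer]:
    """Decide whether a completion asks for a tool or answers the user."""
    # "AI: ..." means the model answers directly; keep everything after the prefix.
    final = re.search(rf"^{re.escape(AI_PREFIX)}:\s*(.*)", completion, re.MULTILINE | re.DOTALL)
    if final:
        return FinalAnswer(final.group(1).strip())
    # Otherwise expect the "Action:" and "Action Input:" lines from FORMAT_INSTRUCTIONS.
    action = re.search(r"^Action:\s*(.+?)\s*$", completion, re.MULTILINE)
    action_input = re.search(r"^Action Input:\s*(.*?)\s*$", completion, re.MULTILINE)
    if action and action_input:
        if action.group(1) not in TOOL_NAMES:
            raise ValueError(f"Unknown tool: {action.group(1)!r}")
        return ToolCall(action.group(1), action_input.group(1))
    raise ValueError(f"Could not parse completion: {completion!r}")

completion = (
    "Thought: Do I need to use a tool? Yes\n"
    "Action: Power BI executor\n"
    "Action Input: total sales in Q1 2023"
)
print(parse_completion(completion))
# ToolCall(tool='Power BI executor', tool_input='total sales in Q1 2023')
```

Rejecting unknown tool names, rather than silently ignoring them, gives the agent a chance to feed an error back to the model as an observation and let it try again.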

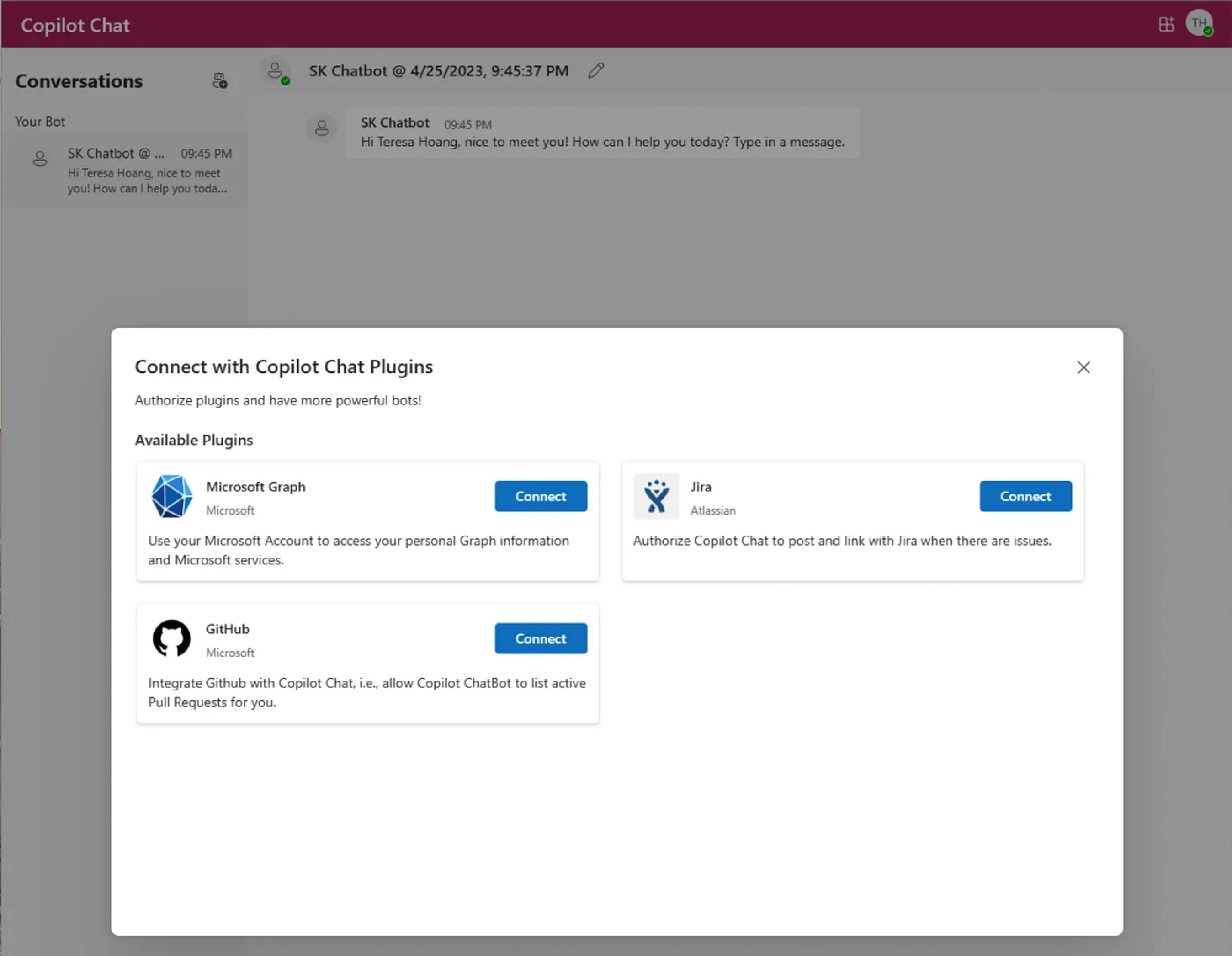

Example of an open-source chat interface that supports Chat Plugins. (Source: Semantic Kernel on GitHub).

3. Build an agent that supports plugins

If you’re not using the public research preview of ChatGPT (chat.openai.com) and prefer the (Azure) OpenAI API or another LLM, you’ll have to implement the patterns described earlier yourself. Fortunately, numerous frameworks already implement these concepts. Currently, LangChain, LlamaIndex (formerly GPT Index) and Semantic Kernel are the most popular frameworks for this purpose.

Keep in mind that naming conventions vary across frameworks. LangChain includes agents that implement the ReAct logic along with various tools, while Semantic Kernel calls them skills. The advantage of LangChain and similar SDKs over ChatGPT plugins is that they work with most available LLMs and support local skills, which reduce latency.
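Whatever the framework calls them, a local tool or skill boils down to a plain function plus a name and a natural-language description the model can read. The framework-agnostic sketch below illustrates that idea with a hypothetical `register_tool` decorator and registry; it is not the actual API of LangChain or Semantic Kernel.

```python
from typing import Callable

# Hypothetical in-process registry: tool name -> description and callable.
TOOL_REGISTRY: dict[str, dict] = {}

def register_tool(name: str) -> Callable[[Callable[[str], str]], Callable[[str], str]]:
    """Hypothetical decorator: register a function as a tool, using its docstring as the description."""
    def decorator(func: Callable[[str], str]) -> Callable[[str], str]:
        TOOL_REGISTRY[name] = {"description": (func.__doc__ or "").strip(), "func": func}
        return func
    return decorator

@register_tool("Word Count")
def word_count(text: str) -> str:
    """Useful for counting the words in a piece of text."""
    return str(len(text.split()))

def describe_tools() -> str:
    """Render the registry as the tool list that goes into the prompt."""
    return "\n".join(f"{name}: {spec['description']}" for name, spec in TOOL_REGISTRY.items())

def call_tool(name: str, tool_input: str) -> str:
    # Runs in the same process as the agent: no network round trip to a remote plugin.
    return TOOL_REGISTRY[name]["func"](tool_input)

print(describe_tools())
print(call_tool("Word Count", "plugins extend language models"))
```

Because the function runs alongside the agent, calling it avoids the HTTP request a remote plugin needs, which is where the latency advantage of local skills comes from.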

The following resources can serve as a starting point:

- OpenAI cookbook — How to build a tool-using agent with LangChain (notebook)

- Semantic Kernel: Skills Overview

- OpenAI and Power BI Datasets to provide a new interface to your business data, an example of how such concepts can work to solve a business need

At present, these methods require a code-first approach, with open-source frameworks helping to accelerate your progress. Plugin support is expected to come to the (Azure) OpenAI API as well, making it possible to add plugins to an LLM agent with little or no code. Once this feature is available, you can concentrate on the functionality offered by plugins rather than on the complete agent architecture.

Tweet from Adam Goldberg, Head of Azure OpenAI Enablement: “Currently plugins are a ChatGPT thing. Expectation is they will come to the API eventually.”

— Adam.GPT (@TheRealAdamG) April 27, 2023

4. Provide your own “AI plugins”

In a nutshell, a plugin that LLMs like ChatGPT can use is a web service (API) consisting of three primary elements: an HTTPS endpoint, an OpenAPI specification, and a plugin manifest. The OpenAPI specification and the manifest describe each part of the web service in detail and outline its potential uses. This enables the LLM to understand the available options and choose among them through ReAct (as discussed before).
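As an illustration, the manifest is a small JSON file that ChatGPT fetches from `/.well-known/ai-plugin.json` on the plugin’s domain. The sketch below builds one for a hypothetical to-do plugin; the field names follow OpenAI’s plugin documentation as of mid-2023 and may have changed since, and every URL and value is a placeholder.

```python
import json

BASE_URL = "https://todo.example.com"  # placeholder domain for a hypothetical plugin

manifest = {
    "schema_version": "v1",
    "name_for_human": "TODO List",
    "name_for_model": "todo",
    "description_for_human": "Manage your TODO list.",
    # The model reads this text when deciding whether and how to use the plugin.
    "description_for_model": "Plugin for managing a user's TODO list. Use it to add, list and delete TODO items.",
    "auth": {"type": "none"},
    # Points to the OpenAPI specification that describes each endpoint.
    "api": {"type": "openapi", "url": f"{BASE_URL}/openapi.yaml"},
    "logo_url": f"{BASE_URL}/logo.png",
    "contact_email": "support@example.com",
    "legal_info_url": f"{BASE_URL}/legal",
}

# Fields used in this sketch; check OpenAI's current documentation for the authoritative list.
EXPECTED_FIELDS = {
    "schema_version", "name_for_human", "name_for_model", "description_for_human",
    "description_for_model", "auth", "api", "logo_url", "contact_email", "legal_info_url",
}

def missing_fields(candidate: dict) -> list[str]:
    """Return the expected manifest fields that are absent, in sorted order."""
    return sorted(EXPECTED_FIELDS.difference(candidate))

assert missing_fields(manifest) == []
# This JSON would be served at f"{BASE_URL}/.well-known/ai-plugin.json".
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

Note the split between `description_for_human` and `description_for_model`: the latter is effectively part of the agent’s prompt, so it is worth writing with the same care as the tool descriptions in the LangChain example.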

Are you considering offering a plugin in the future? Begin by developing a robust API, together with an OpenAPI specification. This will place you among the growing number of companies that are already investing in such plugins.

The first plugins have been created by Expedia, FiscalNote, Instacart, KAYAK, Klarna, Milo, OpenTable, Shopify, Slack, Speak, Wolfram, and Zapier. (ChatGPT plugins announcement, March 23, 2023)

If you already have an API in place, carefully determine which endpoints you wish to expose and identify the use cases they could serve. Commit to writing a comprehensive OpenAPI specification and manifest that clearly explain, in plain language, the capabilities of each endpoint and the input it requires.
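Why these descriptions matter becomes clearer when you look at what a model could actually be shown. The sketch below takes a minimal OpenAPI 3.0 document for a hypothetical to-do API (written as a Python dict) and flattens each operation’s `operationId`, `summary` and parameter descriptions into one-line tool descriptions. How ChatGPT itself processes the specification is not public, so this only illustrates the principle.

```python
# Minimal OpenAPI 3.0 document for a hypothetical TODO API, written as a Python dict.
USERNAME_PARAM = {"name": "username", "in": "path", "required": True,
                  "description": "The name of the user.", "schema": {"type": "string"}}

spec = {
    "openapi": "3.0.1",
    "info": {"title": "TODO Plugin", "version": "v1"},
    "paths": {
        "/todos/{username}": {
            "get": {
                "operationId": "getTodos",
                "summary": "Get the list of TODO items for a user",
                "parameters": [USERNAME_PARAM],
            },
            "delete": {
                "operationId": "deleteTodo",
                "summary": "Delete a TODO item from a user's list",
                "parameters": [
                    USERNAME_PARAM,
                    {"name": "todo_idx", "in": "query", "required": True,
                     "description": "The index of the TODO item to delete.",
                     "schema": {"type": "integer"}},
                ],
            },
        },
    },
}

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}

def describe_operations(openapi: dict) -> list[str]:
    """Flatten every operation into a one-line description a model could read."""
    lines = []
    for path, path_item in openapi.get("paths", {}).items():
        for method, operation in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters" or "summary"
            name = operation.get("operationId", f"{method.upper()} {path}")
            summary = operation.get("summary", "no summary")
            inputs = ", ".join(
                f"{p['name']} ({p.get('description', 'no description')})"
                for p in operation.get("parameters", [])
            )
            lines.append(f"{name}: {summary}. Inputs: {inputs or 'none'}")
    return lines

for line in describe_operations(spec):
    print(line)
```

If an operation lacks a `summary` or its parameters lack a `description`, the model is left with little more than a bare name such as `deleteTodo`, which is exactly the situation the advice above tries to avoid.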

Resources that can help you get started building plugins:

- Getting Started — OpenAI API — understand the basics of a ChatGPT plugin

- OpenAI Plugins Quickstart — get a ToDo list plugin up and running in under 5 minutes (Python)

- OpenAI ChatGPT retrieval plugin — a plugin that enables integration with personal or organizational documents

If you are the developer of the chat agent, you can also adopt a more local approach by combining it with frameworks such as LangChain or Semantic Kernel. While this may reduce latency, it increases complexity, as you will be responsible for managing a larger portion of the infrastructure.

Do we need a new standard for AI Plugins? Please, no! (Image by xkcd)

Conclusion

Prompting techniques such as chain-of-thought and ReAct are evolving rapidly. As explored in this article, these methods have demonstrated considerable improvements in LLM performance. However, there is ample room for optimization, which makes this an exciting and dynamic field to watch.

Recent releases such as AutoGPT, JARVIS (HuggingGPT) and BabyAGI show that we are just at the beginning. Will we soon see bots that run fully autonomously with unlimited tools? I doubt it. While these research papers and projects show tremendous potential, short-term gains are more plausible in use cases with a limited scope and a select set of tools.

Plugins will allow you to use LLMs in more scenarios, but it is essential to consider your specific use case and the platform that best suits your users’ needs. Chat is not always the solution; LLMs can be powerful in many different forms.

What are your thoughts on this topic? Do you agree or disagree? Share your thoughts in the comments and join the discussion. Thanks for reading!

If you enjoyed this article, feel free to connect with me on LinkedIn, GitHub or Twitter.

References

[1] OpenAI. “ChatGPT plugins”. May 2023, https://openai.com/blog/chatgpt-plugins

[2] Wattenberger, A. “Why Chatbots Are Not the Future”. May 2023, https://wattenberger.com/thoughts/boo-chatbots

[3] Yao, S., Zhao, J., Yu, D., Du, N., Shafran, I., Narasimhan, K., Cao, Y. “ReAct: Synergizing Reasoning and Acting in Language Models” (2023), arXiv:2210.03629

[4] Karpas, E., Abend, O., Belinkov, Y., Lenz, B., Lieber, O., Ratner, N., Shoham, Y., Bata, H., Levine, Y., Leyton-Brown, K., Muhlgay, D., Rozen, N., Schwartz, E., Shachaf, G., Shalev-Shwartz, S., Shashua, A., Tenenholtz, M. “MRKL Systems: A modular, neuro-symbolic architecture that combines large language models, external knowledge sources and discrete reasoning” (2022), arXiv:2205.00445

[5] Schick, T., Dwivedi-Yu, J., Dessì, R., Raileanu, R., Lomeli, M., Zettlemoyer, L., Cancedda, N., Scialom, T. “Toolformer: Language Models Can Teach Themselves to Use Tools” (2023), arXiv:2302.04761